
Installing & configuring Cmder on Windows 10

Overview

Cmder is an awesome console emulator package for Windows and an essential tool for starting your DevOps journey on the Windows platform.

In this blog post, join me for a step-by-step installation and configuration of the Cmder console emulator, along with a few settings I like to apply when setting up my environment with this tool.

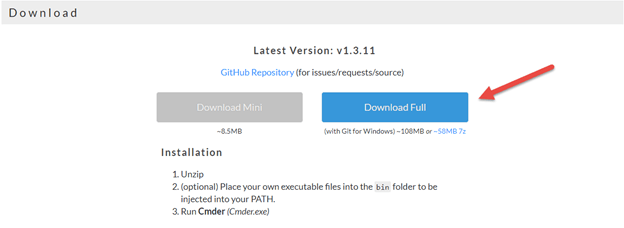

Download Cmder for Windows 10

1. Open your browser and go to the Cmder home page.

2. On the home page, scroll down to the Download section.

3. Select the Download Full button.

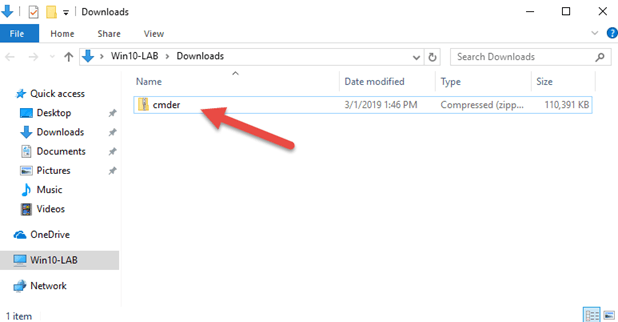

Once the cmder.zip file has downloaded:

4. Cut the cmder.zip file and place it in the destination folder - C:\DevOps\Apps

Note - this is just a personal preference for my lab environment; you can unzip the file to any folder you prefer.

To keep things organized, I place all my DevOps tool executables under the C:\DevOps\Apps\[Application] path, which makes it easy to back up everything and restore it quickly on another machine.

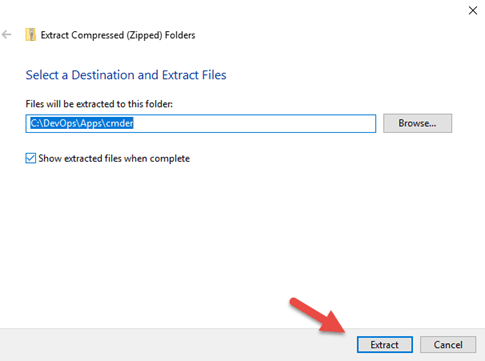

Installing Cmder

Now that the cmder.zip file is downloaded and moved to the C:\DevOps\Apps folder, extract the console emulator package from it.

5. Right-click the cmder.zip file, use the default Windows 10 zip extraction, and click the Extract button.

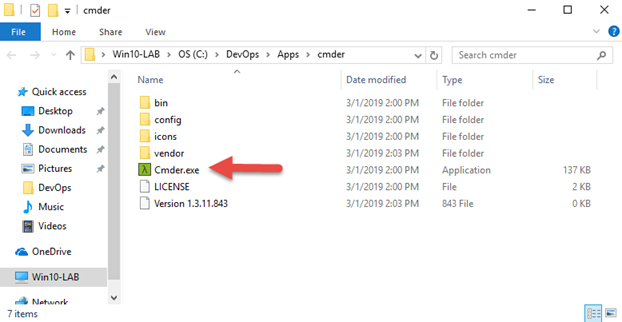

Cmder is now installed in the folder it was extracted to.
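If you prefer to script the extraction step, here is a minimal sketch in Python. It assumes Python is installed on the machine, which is not part of the original setup, and `extract_cmder` is a hypothetical helper name used only for illustration. It unpacks the archive into a folder named after the zip file, next to wherever you point it.

```python
import zipfile
from pathlib import Path


def extract_cmder(zip_path, dest_dir):
    """Extract the downloaded archive into dest_dir and return the target folder."""
    zip_path = Path(zip_path)
    # Extract into a folder named after the archive (cmder.zip -> cmder).
    target = Path(dest_dir) / zip_path.stem
    target.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(target)
    return target


if __name__ == "__main__":
    # Path taken from this post; adjust it to your own layout.
    zip_file = Path(r"C:\DevOps\Apps\cmder.zip")
    if zip_file.exists():
        print(extract_cmder(zip_file, zip_file.parent))
    else:
        print(f"{zip_file} not found, nothing extracted")
```

The function only reads the zip file and writes under the target folder, so it is safe to re-run after a fresh download.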

Configuring Cmder

The last part of the installation and base configuration is to add the folder that contains cmder.exe to the computer’s system Path environment variable.

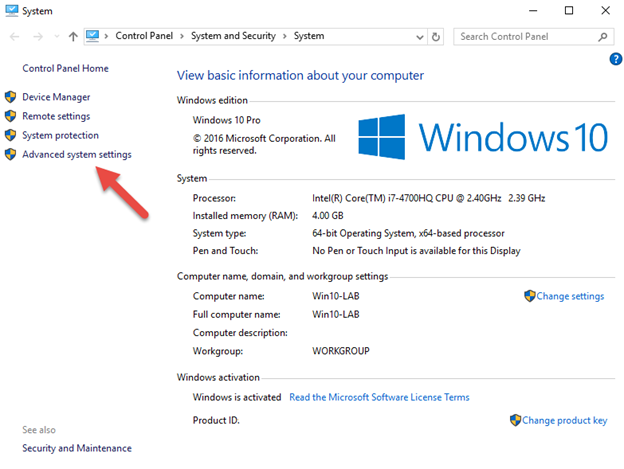

6. On the desktop, right-click the Win10-LAB computer icon and select Properties.

7. Then select Advanced system settings.

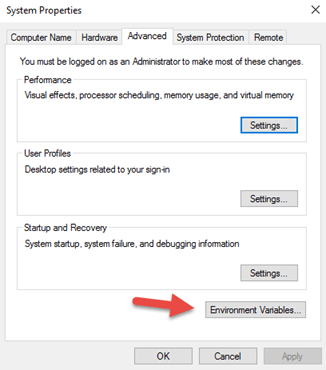

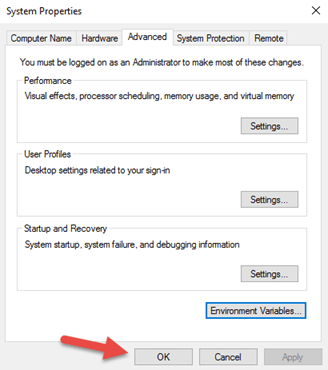

From the System Properties window:

8. Select the Environment Variables button.

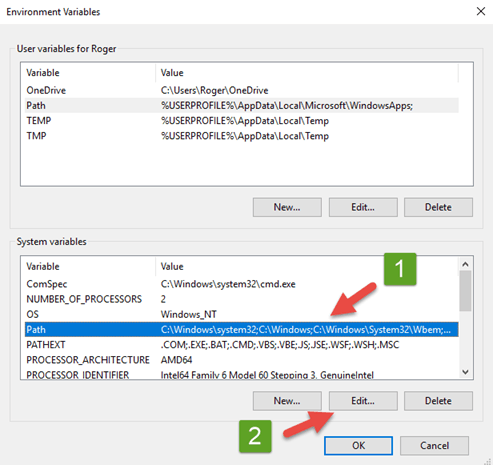

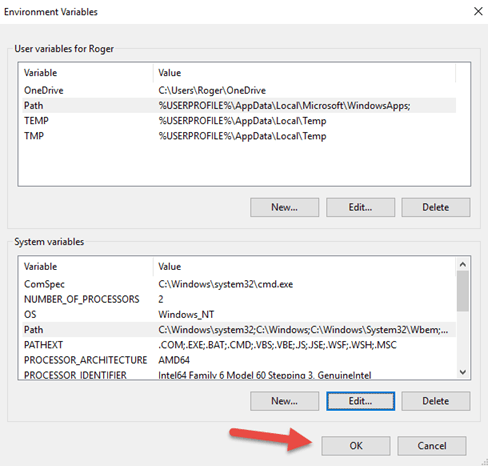

On the Environment Variables window

9. Select Path in the System Variables area, then select the Edit button.

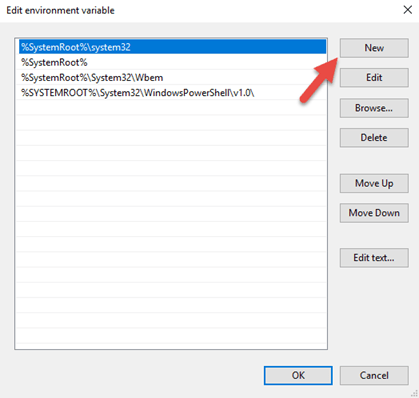

On the Edit environment variable window

10. Select the New button to add a new path.

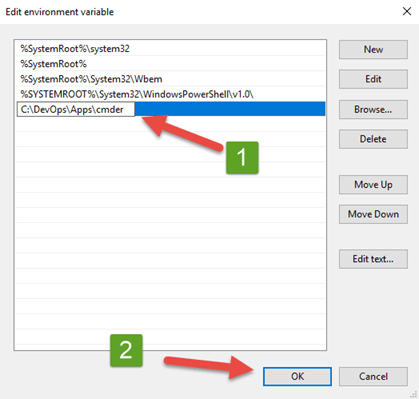

11. Enter the path of the folder that contains cmder.exe - C:\DevOps\Apps\cmder

12. Click the OK button to add the path and close the window.

13. Click the OK button to save the path update.

14. Click the OK button to close the System Properties window.

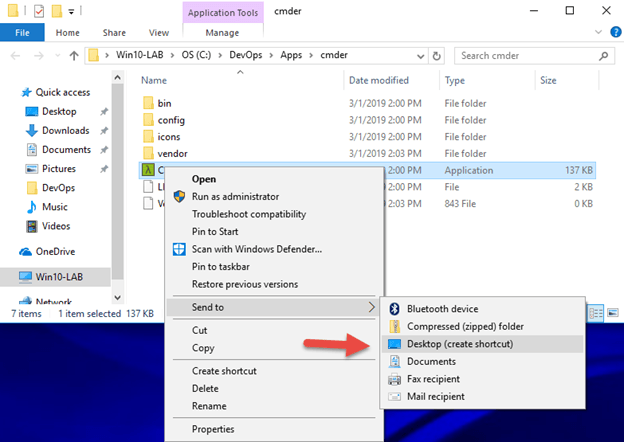

15. Finally, for easy access, create a desktop shortcut to Cmder.exe by using the Send to Desktop option.

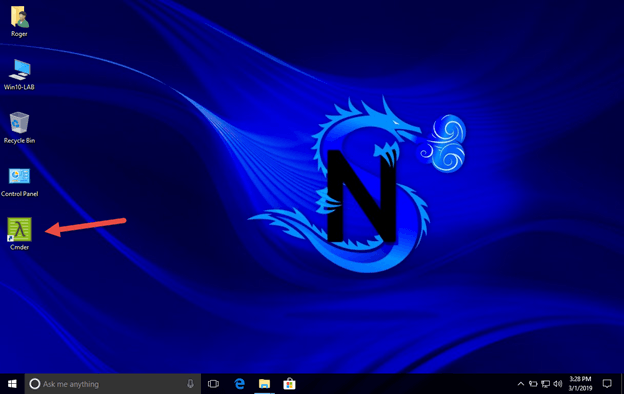

16. Now let’s check that it works: double-click the Cmder icon on the desktop.

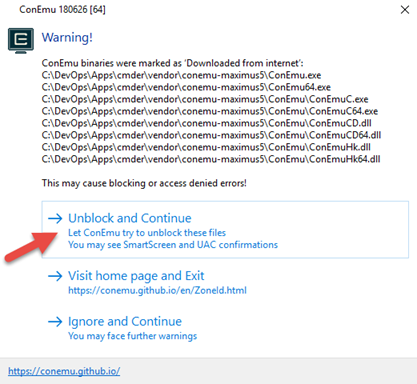

17. Select Unblock and Continue to unblock the files and allow Cmder.exe to run.

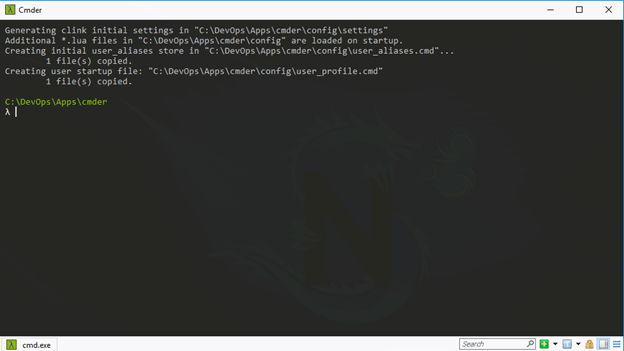

And there you have it: Cmder is installed and the base configuration is complete.

Note - The window above opens with the default Command Prompt shell.
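To double-check the Path entry added in steps 9 to 14 without clicking back through the dialogs, a small script can help. This is a sketch under assumptions: Python is not part of the original setup, and `is_on_path` is a hypothetical helper name. It compares folder names the way Windows does, ignoring case and trailing backslashes.

```python
import ntpath
import os


def is_on_path(folder, path_value):
    """Return True if folder is one of the entries in a Windows-style PATH string."""
    def norm(p):
        # Windows paths are case-insensitive; also ignore quotes and trailing slashes.
        return ntpath.normcase(ntpath.normpath(p.strip().strip('"')))

    wanted = norm(folder)
    entries = [e for e in path_value.split(";") if e.strip()]
    return any(norm(entry) == wanted for entry in entries)


if __name__ == "__main__":
    cmder_dir = r"C:\DevOps\Apps\cmder"  # folder added in step 11
    print(is_on_path(cmder_dir, os.environ.get("PATH", "")))
```

Run it from a console opened after saving the change, because processes that were already running keep the old Path value.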

Customizing Cmder

Now let’s add a few custom settings to personalize the Win10-LAB environment

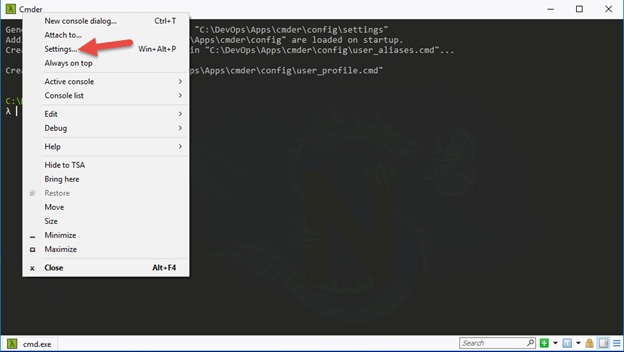

18. At the top of the Cmder window, right-click and select Settings.

Modify the Console Color Scheme

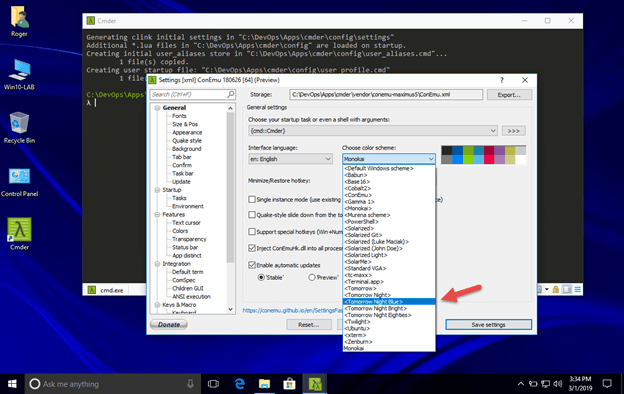

From the Cmder Settings window:

19. In the Choose Color Scheme section, select a color scheme. In my case, I chose the Tomorrow Night Blue color scheme.

Customize Environment Shell - PowerShell

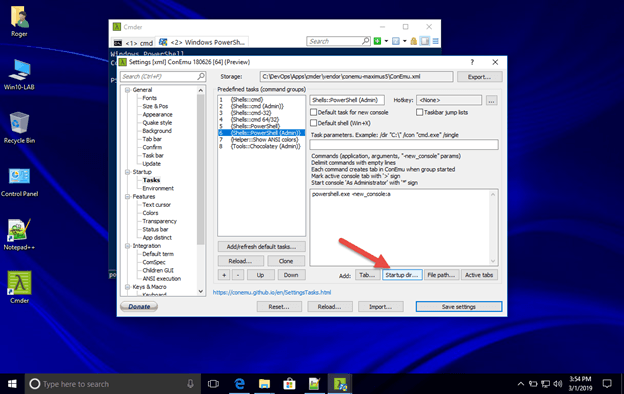

I like configuring the Admin PowerShell shell to start in a specified directory. It lets me work within a dedicated scripts directory, which I can later put under version control with Git for Windows.

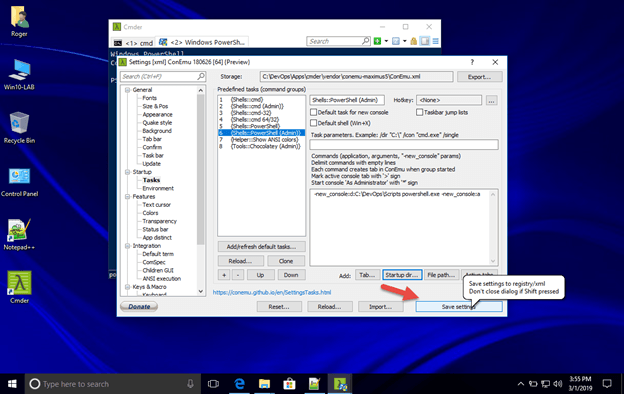

20. In the Startup section, under Predefined tasks (command groups), select #6 {Shells::PowerShell (Admin)}.

To add a new path for the default folder:

21. Click the Startup Dir… button.

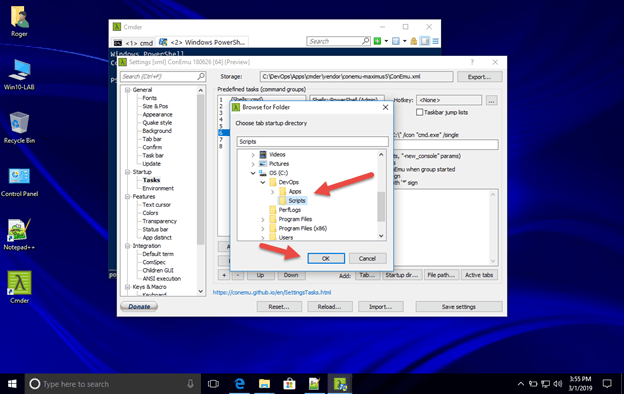

22. Browse to your preferred startup folder - C:\DevOps\Scripts

23. Then click the OK button.

24. To save the configuration, click the Save Settings button.
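The startup folder has to exist before you can browse to it in step 22. As a minimal sketch, assuming Python is available (it is not part of the original setup) and using the hypothetical helper name `ensure_startup_dir`, the folder can be created up front like this:

```python
import os
from pathlib import Path


def ensure_startup_dir(path):
    """Create the folder (and any missing parents) if needed and return it."""
    folder = Path(path)
    folder.mkdir(parents=True, exist_ok=True)
    return folder


if __name__ == "__main__" and os.name == "nt":
    # Startup folder used in this post; only created when running on Windows.
    print(ensure_startup_dir(r"C:\DevOps\Scripts"))
```

Calling it again on an existing folder does nothing, so it can sit at the top of a machine setup script.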

Test PowerShell Window

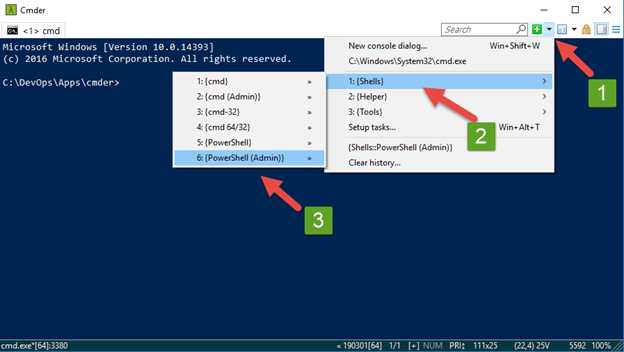

Now that we have made the changes, let’s see what it looks like.

25. Click the arrow to the right of the search box.

26. Then select 1: {Shells} and click 6: {PowerShell (Admin)}.

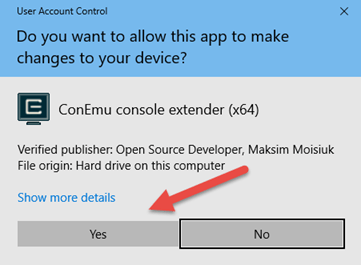

27. Click the Yes button to allow the shell to run in administrative mode.

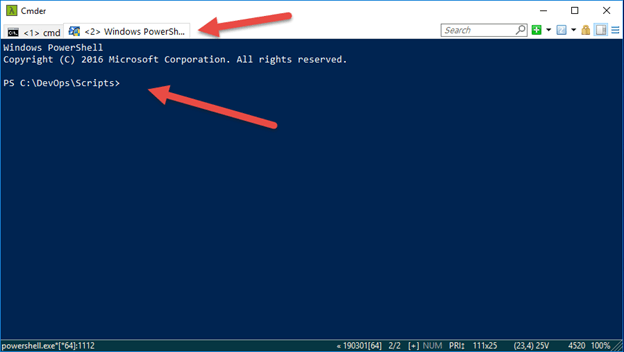

There you go: Cmder is installed and configured on Windows 10, with the default startup folder set for the Admin PowerShell shell.

I hope you enjoyed this step-by-step journey configuring Cmder, a very useful DevOps tool for running multiple consoles.