PowerShell - Create Site Collection Inventory Report

How to create a site collection Inventory report

Overview

The goal is to use PnP PowerShell to create a script that loops through all the site collections in a specified tenant and generates a Site Collection Inventory report file.

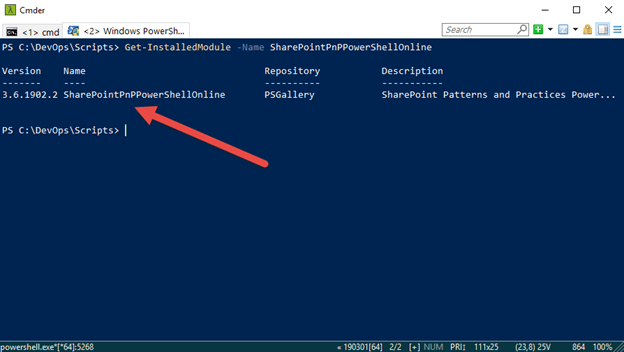

Check Version Installed

First, let's check which version of the SharePoint Online PnP PowerShell module is installed.

1. Type the following and press Enter:

```powershell
Get-InstalledModule -Name SharePointPnPPowerShellOnline | Select-Object Name, Version
```

Parameters

-Name - Specifies an array of names of modules to get.

-AllVersions - Indicates that you want to get all available versions of a module.

Finally, once we retrieve the specified module(s), we use the pipe operator ( | ) to pass them to Select-Object (alias select), which keeps only the Name and Version properties for display in the console.
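If the module has been updated over time, several versions can sit side by side. The sketch below uses the -AllVersions parameter described above to list every installed version, newest first. Get-ModuleVersionList is a hypothetical helper name, not part of PowerShellGet or PnP.

```powershell
# Hypothetical helper (not part of PowerShellGet or PnP): list installed versions of a module, newest first
function Get-ModuleVersionList {
    param(
        [string]$Name = 'SharePointPnPPowerShellOnline'
    )

    # -AllVersions returns one object per installed version;
    # -ErrorAction SilentlyContinue suppresses the error raised when the module is not installed
    $modules = Get-InstalledModule -Name $Name -AllVersions -ErrorAction SilentlyContinue
    if (-not $modules) {
        Write-Warning "Module '$Name' is not installed."
        return
    }

    # Sort as [version] so 3.29 ranks above 3.3 (a plain string sort would not);
    # any prerelease suffix such as "-preview" is stripped before the cast
    $modules |
        Sort-Object -Property { [version]($_.Version.ToString() -replace '-.*$', '') } -Descending |
        Select-Object Name, Version
}
```

Running Get-ModuleVersionList with no arguments checks the PnP module; pass -Name to check any other module.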

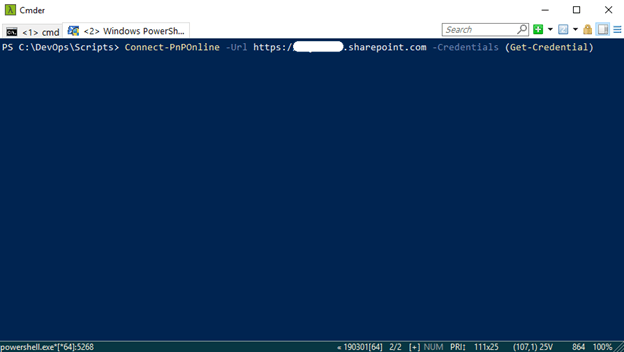

Validate SharePoint Online PnP Connection

To use the installed module, you first need to connect to your tenant. To do that, we use the following PnP command to connect to a SharePoint site.

2. At the command line enter the following and press Enter:

```powershell
Connect-PnPOnline -Url https://[sitename].sharepoint.com -Credentials (Get-Credential)
```

Parameters

-Url - The Url of the site collection to connect to.

-Credentials - Credentials of the user to connect with.

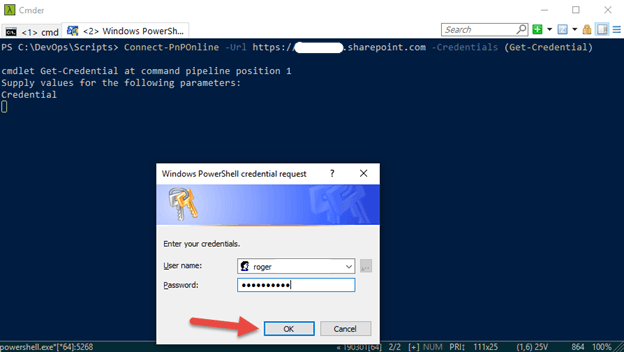

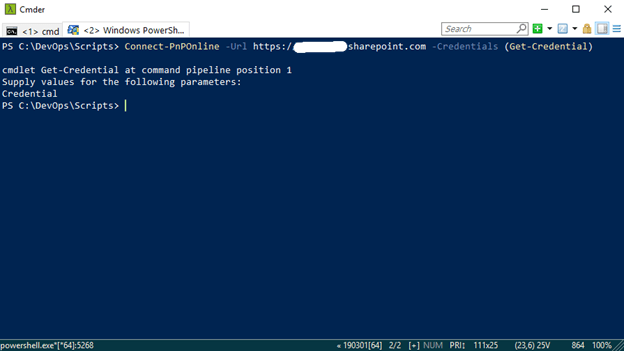

The Windows PowerShell credential request window appears.

3. Enter your Microsoft 365 credentials (user name in the format [name]@domain.com, plus password) and click the OK button.

You're now connected to the [sitename].sharepoint.com site specified in the command above.
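A quick way to confirm the connection actually works is to read something back from the site. Below is a minimal sketch, assuming the PnP module is loaded: Connect-AndVerifySite is a hypothetical helper that wraps the same Connect-PnPOnline call and then uses Get-PnPWeb to fetch the connected site's root web.

```powershell
# Hypothetical helper: connect to a SharePoint site, then confirm the connection by reading the root web
function Connect-AndVerifySite {
    param(
        [Parameter(Mandatory)][string]$SiteUrl,
        [Parameter(Mandatory)][pscredential]$Credential
    )

    # Same call as in step 2, with the credential passed in instead of prompted for inline
    Connect-PnPOnline -Url $SiteUrl -Credentials $Credential

    # Get-PnPWeb returns the root web of the connected site; reading its title proves the connection works
    $web = Get-PnPWeb
    Write-Host "Connected to '$($web.Title)' at $SiteUrl"
    $web
}
```

Usage would look like Connect-AndVerifySite -SiteUrl https://[sitename].sharepoint.com -Credential (Get-Credential).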

Script Structure

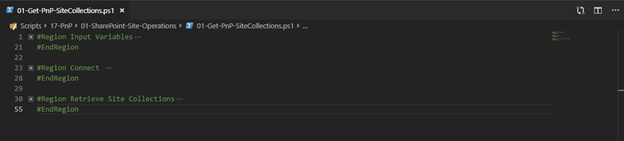

Regions

OK, now let's lay out the script structure. For this we can use PowerShell regions.

Regions are used to structure your code and give its sections descriptive names. A region is simply a pair of special comments, #Region <name> and #EndRegion, so PowerShell ignores them when the script runs, while editors such as Visual Studio Code let you collapse and expand each named section.

For this script I created three regions:

- #Region Input Variables - In this area we specify all the input parameters.

- #Region Connect - We create a connection to the Microsoft 365 tenant.

- #Region Retrieve Site Collections - We loop through the site collections and export them to a CSV file.

Let's step through each region below.

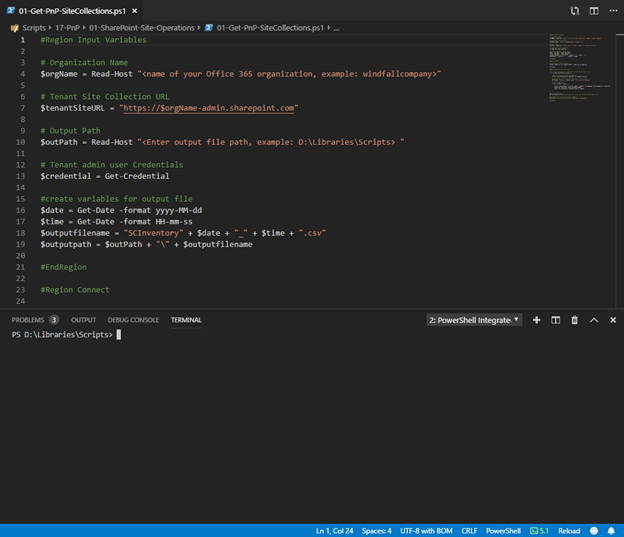

Region Input Variables

```powershell
#Region Input Variables

# Organization Name - Create a variable called $orgName to hold the organization name entered by the user running the script
$orgName = Read-Host "<name of your Office 365 organization, example: windfallcompany>"

# Tenant Admin URL - Use the $orgName variable above to build the tenant admin URL
$tenantSiteURL = "https://$orgName-admin.sharepoint.com"

# Output Path - Enter a folder path where the output file will be created
$outPath = Read-Host "<Enter output file path, example: D:\Libraries\Scripts>"

# Tenant admin user credentials - Enter tenant admin credentials to connect and inventory the site collections
$credential = Get-Credential

# Create variables for the output file - Build a simple timestamped output file name
$date = Get-Date -Format yyyy-MM-dd
$time = Get-Date -Format HH-mm-ss
$outputfilename = "SCInventory" + $date + "_" + $time + ".csv"
$outputpath = Join-Path -Path $outPath -ChildPath $outputfilename

#EndRegion
```
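The output path could also be built and checked in one place. This is a sketch under a few assumptions: New-InventoryFilePath is a hypothetical helper, it expects the folder to exist already, and it keeps the same SCInventory<date>_<time>.csv naming used above. A single Get-Date value also guarantees the date and time parts come from the same moment.

```powershell
# Hypothetical helper: build a timestamped CSV path inside an existing folder
function New-InventoryFilePath {
    param(
        [Parameter(Mandatory)][string]$Folder,
        [datetime]$Timestamp = (Get-Date)
    )

    # Fail early if the folder typed at the Read-Host prompt does not exist
    if (-not (Test-Path -LiteralPath $Folder -PathType Container)) {
        throw "Output folder '$Folder' does not exist."
    }

    # One format string produces the same yyyy-MM-dd_HH-mm-ss stamp as the two Get-Date calls above
    $fileName = 'SCInventory{0:yyyy-MM-dd_HH-mm-ss}.csv' -f $Timestamp

    # Join-Path adds the separator, so a trailing "\" in the input does not produce a doubled one
    Join-Path -Path $Folder -ChildPath $fileName
}
```

In the Input Variables region this would replace the last four lines with $outputpath = New-InventoryFilePath -Folder $outPath.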

Region Connect

```powershell
#Region Connect

# Connect and create the context - Using the $credential variable captured earlier, establish a connection to SharePoint Online
Connect-PnPOnline -Url $tenantSiteURL -Credentials $credential

#EndRegion
```
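The connection only works if $tenantSiteURL is exactly https://<org>-admin.sharepoint.com, so a stray space typed at the Read-Host prompt will break it. Here is a small sketch that cleans the input before building the URL. Get-TenantAdminUrl is a hypothetical helper, and the only rule it enforces (no whitespace inside the name) is an assumption kept deliberately loose.

```powershell
# Hypothetical helper: build the tenant admin URL from the organization name typed at the prompt
function Get-TenantAdminUrl {
    param(
        [Parameter(Mandatory)][string]$OrgName
    )

    # Remove leading and trailing spaces, which Read-Host keeps exactly as typed
    $name = $OrgName.Trim()

    # Reject empty input or a name with spaces inside it, which cannot form a valid host name
    if ([string]::IsNullOrEmpty($name) -or $name -match '\s') {
        throw "'$OrgName' is not a valid organization name."
    }

    "https://$name-admin.sharepoint.com"
}
```

For example, $tenantSiteURL = Get-TenantAdminUrl -OrgName $orgName would produce https://windfallcompany-admin.sharepoint.com for the input " windfallcompany ".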

Region Retrieve Site Collections

```powershell
#Region Retrieve Site Collections

# Function - Retrieve the site collections from the specified Office 365 tenant
function RetrieveSiteCollections () {

    # Retrieve the site collections in the tenant (including OneDrive sites) and store them in $sites
    $sites = Get-PnPTenantSite -Detailed -IncludeOneDriveSites

    # Display the number of site collections on the console, using the Count property of $sites
    Write-Host "There are $($sites.Count) site collections present"

    # Loop through sites - For each site in $sites, select Title, Url, Owner, etc. and append a row to the CSV file
    foreach ($site in $sites) {
        $site | Select-Object Title, Url, Owner, Template, StorageUsage, SharingCapability, WebsCount, IsHubSite, HubSiteId, LastContentModifiedDate |
            Export-Csv $outputpath -NoTypeInformation -Append
    }
}

# Call the function
RetrieveSiteCollections # Retrieves site collections from the Office 365 tenant

# Display Site Collection Inventory complete - Finally, show where the output file was saved
Write-Host "Site Collection Inventory Completed in $outputpath"

#EndRegion
```
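The foreach loop re-opens the CSV file once per site because of -Append. Since Export-Csv accepts pipeline input, the same inventory can be written in a single call. A sketch of that variant, assuming the same PnP connection: Export-SiteInventory is a hypothetical name, and it takes the output path as a parameter instead of reading $outputpath from the script scope.

```powershell
# Hypothetical variant of RetrieveSiteCollections: one Export-Csv call instead of one per site
function Export-SiteInventory {
    param(
        [Parameter(Mandatory)][string]$Path
    )

    # Same columns as the original Select-Object list
    $columns = @('Title', 'Url', 'Owner', 'Template', 'StorageUsage', 'SharingCapability',
                 'WebsCount', 'IsHubSite', 'HubSiteId', 'LastContentModifiedDate')

    # Same retrieval as above: all site collections, including OneDrive sites
    $sites = Get-PnPTenantSite -Detailed -IncludeOneDriveSites
    Write-Host "There are $(@($sites).Count) site collections present"

    # Stream every site through one pipeline; Export-Csv writes the header once and opens the file once
    $sites |
        Select-Object -Property $columns |
        Export-Csv -Path $Path -NoTypeInformation
}
```

The call would then be Export-SiteInventory -Path $outputpath. Because the file name is timestamped, overwriting instead of appending loses nothing.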

Complete Script

```powershell
#Region Input Variables

# Organization Name
$orgName = Read-Host "<name of your Office 365 organization, example: windfallcompany>"

# Tenant Admin URL
$tenantSiteURL = "https://$orgName-admin.sharepoint.com"

# Output Path
$outPath = Read-Host "<Enter output file path, example: D:\Libraries\Scripts>"

# Tenant admin user credentials
$credential = Get-Credential

# Create variables for the output file
$date = Get-Date -Format yyyy-MM-dd
$time = Get-Date -Format HH-mm-ss
$outputfilename = "SCInventory" + $date + "_" + $time + ".csv"
$outputpath = Join-Path -Path $outPath -ChildPath $outputfilename

#EndRegion

#Region Connect

# Connect and create the context
Connect-PnPOnline -Url $tenantSiteURL -Credentials $credential

#EndRegion

#Region Retrieve Site Collections

# Function to retrieve site collections from the specified Office 365 tenant
function RetrieveSiteCollections () {

    # Retrieve the site collections in the tenant
    $sites = Get-PnPTenantSite -Detailed -IncludeOneDriveSites

    # Display the site collection count on the console
    Write-Host "There are $($sites.Count) site collections present"

    # Loop through sites and append each one to the CSV file
    foreach ($site in $sites) {
        $site | Select-Object Title, Url, Owner, Template, StorageUsage, SharingCapability, WebsCount, IsHubSite, HubSiteId, LastContentModifiedDate |
            Export-Csv $outputpath -NoTypeInformation -Append
    }
}

# Call the function
RetrieveSiteCollections # Retrieves site collections from the Office 365 tenant

# Display Site Collection Inventory complete
Write-Host "Site Collection Inventory Completed in $outputpath"

#EndRegion
```

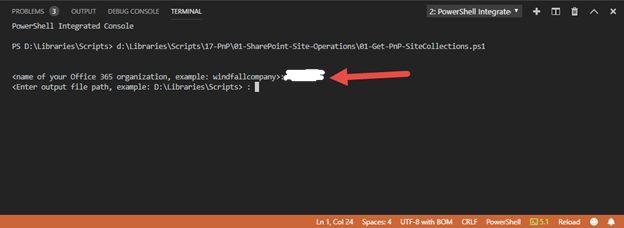

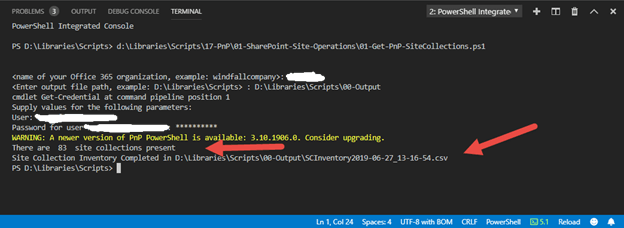

Execute Script

Alright, let's load up the script in Visual Studio Code for a test run.

Press F5 to start debugging.

1. Enter the name of the organization and press Enter.

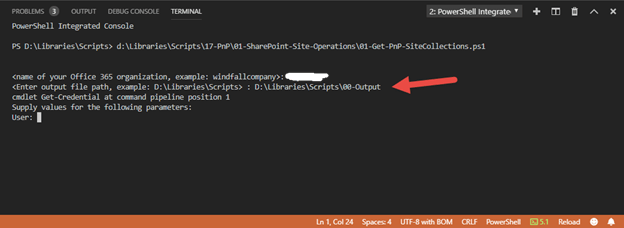

2. Enter the output file path (here, D:\Libraries\Scripts\00-Output) and press Enter.

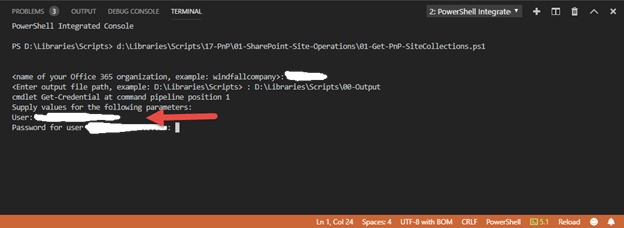

3. Enter the administrator user name in the format name@domainname.com and press Enter.

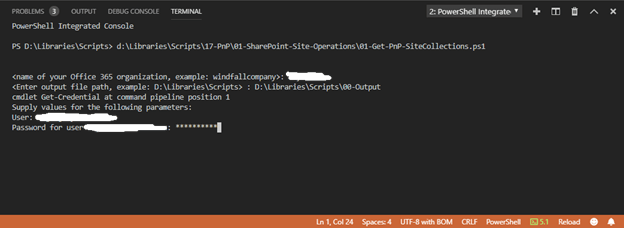

4. Enter the admin password and press Enter.

5. The script runs and displays the total site collection count and the path where the output file was saved.

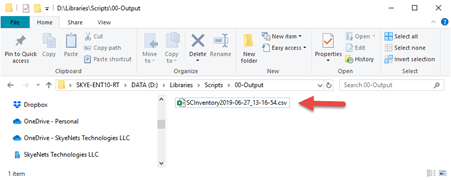

Switching to File Explorer in Windows 10, we see the following:

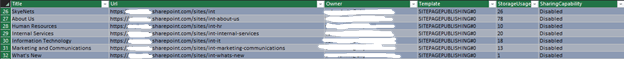

6. Now let's open the file in Microsoft Excel and apply a little formatting.
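Before opening Excel, you can also take a quick look at the CSV from PowerShell. A minimal sketch that counts site collections per template: Get-InventorySummary is a hypothetical helper, and it relies only on the Template column exported by the script above.

```powershell
# Hypothetical helper: summarize an inventory CSV by counting site collections per template
function Get-InventorySummary {
    param(
        [Parameter(Mandatory)][string]$Path
    )

    # Import-Csv turns each row into an object whose properties are the column headers
    Import-Csv -Path $Path |
        Group-Object -Property Template |
        Sort-Object -Property Count -Descending |
        Select-Object @{ Name = 'Template'; Expression = { $_.Name } }, Count
}
```

For example, Get-InventorySummary -Path $outputpath prints one line per template with its site count, most common first.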

Summary

So, in this journey we created a PowerShell script that enumerates all the site collections in the specified organization's tenant and exports the results to a CSV file in the folder specified by the user.

Note: This is a basic script structured with regions; a more advanced version might also include error handling.

Error handling is a very important part of scripting, and one we will cover in a future blog post.

Bye for now 😊!